Covid, Capitalism, Friedrich Engels and Boris Johnson

By Colin Todhunter

Source: Global Research

“And thus it renders more and more evident the great central fact that the cause of the miserable condition of the working class is to be sought, not in these minor grievances, but in the capitalistic system itself.” Friedrich Engels, The Condition of the Working Class in England (1845) (preface to the English Edition, p.36)

The IMF and World Bank have for decades pushed a policy agenda based on cuts to public services, increases in taxes paid by the poorest and moves to undermine labour rights and protections.

IMF ‘structural adjustment’ policies have resulted in 52% of Africans lacking access to healthcare and 83% having no safety nets to fall back on if they lose their job or become sick. Even the IMF has shown that neoliberal policies fuel poverty and inequality.

In 2021, an Oxfam review of IMF COVID-19 loans showed that 33 African countries were encouraged to pursue austerity policies. The world’s poorest countries are due to pay $43 billion in debt repayments in 2022, which could otherwise cover the costs of their food imports.

Oxfam and Development Finance International (DFI) have also revealed that 43 out of 55 African Union member states face public expenditure cuts totalling $183 billion over the next five years.

According to Prof Michel Chossudovsky of the Centre for Research on Globalization, the closure of the world economy (the March 11, 2020 lockdown imposed on more than 190 countries) has triggered an unprecedented process of global indebtedness. Governments are now under the control of global creditors in the post-COVID era.

What we are seeing is a de facto privatisation of the state as governments capitulate to the needs of Western financial institutions.

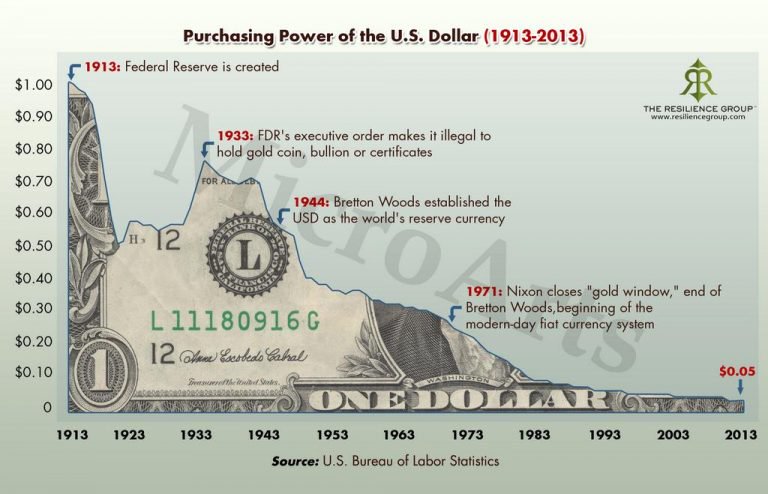

Moreover, these debts are largely dollar-denominated, helping to strengthen the US dollar and US leverage over countries.

It raises the question: what was COVID really about?

Millions have been asking that question since lockdowns and restrictions began in early 2020. If it was indeed about public health, why close down the bulk of health services and the global economy knowing full well what the massive health, economic and debt implications would be?

Why mount a military-style propaganda campaign to censor world-renowned scientists and terrorise entire populations and use the full force and brutality of the police to ensure compliance?

These actions were wholly disproportionate to any risk posed to public health, especially when considering the way ‘COVID death’ definitions and data were often massaged and how PCR tests were misused to scare populations into submission.

Prof Fabio Vighi of Cardiff University implies we should have been suspicious from the start when the usually “unscrupulous ruling elites” froze the global economy in the face of a pathogen that targets almost exclusively the unproductive (the over-80s).

COVID was a crisis of capitalism masquerading as a public health emergency.

Capitalism

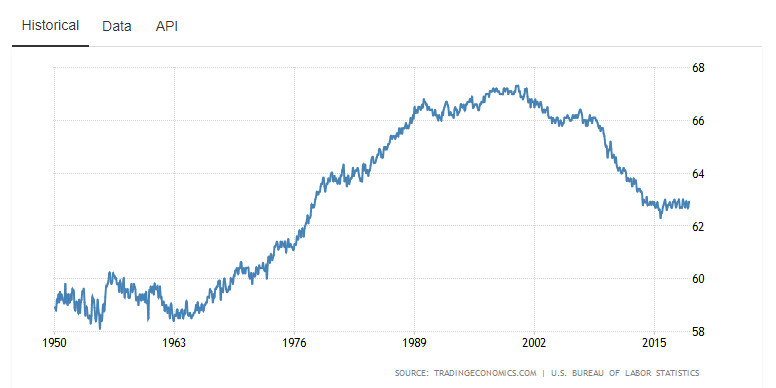

Capitalism needs to keep expanding into or creating new markets to ensure the accumulation of capital to offset the tendency for the general rate of profit to fall. The capitalist needs to accumulate capital (wealth) to be able to reinvest it and make further profits. By placing downward pressure on workers’ wages, the capitalist extracts sufficient surplus value to be able to do this.

But when the capitalist is unable to sufficiently reinvest (due to declining demand for commodities, a lack of investment opportunities and markets, etc), wealth (capital) overaccumulates and devalues, and the system goes into crisis. To avoid crisis, capitalism requires constant growth, markets and sufficient demand.

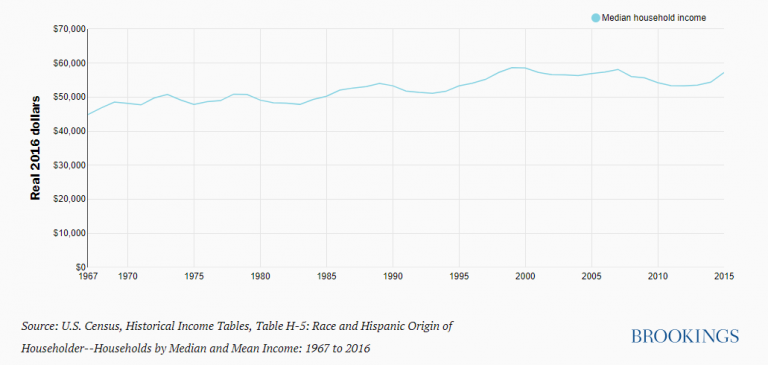

According to writer Ted Reese, the capitalist rate of profit has trended downwards from an estimated 43% in the 1870s to 17% in the 2000s. Although wages and corporate taxes have been slashed, the exploitability of labour was increasingly insufficient to meet the demands of capital accumulation.

By late 2019, many companies could not generate sufficient profit. Falling turnover, limited cashflows and highly leveraged balance sheets were prevalent.

Economic growth was weakening in the run-up to the massive stock market crash in February 2020, which saw trillions more pumped into the system in the guise of ‘COVID relief’.

To stave off crisis up until that point, various tactics had been employed.

Credit markets were expanded and personal debt increased to maintain consumer demand as workers’ wages were squeezed. Financial deregulation occurred and speculative capital was allowed to exploit new areas and investment opportunities. At the same time, stock buybacks, the student debt economy, quantitative easing, massive bailouts and subsidies, and an expansion of militarism helped to maintain economic growth.

There was also a ramping up of an imperialist strategy that has seen indigenous systems of production abroad being displaced by global corporations and states pressurised to withdraw from areas of economic activity, leaving transnational players to occupy the space left open.

While these strategies produced speculative bubbles, led to an overvaluation of assets and increased both personal and government debt, they helped to continue to secure viable profits and returns on investment.

But come 2019, former governor of the Bank of England Mervyn King warned that the world was sleepwalking towards a fresh economic and financial crisis that would have devastating consequences. He argued that the global economy was stuck in a low growth trap and recovery from the crisis of 2008 was weaker than that after the Great Depression.

King concluded that it was time for the Federal Reserve and other central banks to begin talks behind closed doors with politicians.

That is precisely what happened as key players, including BlackRock, the world’s most powerful investment fund, got together to work out a strategy going forward. This took place in the lead-up to COVID.

Aside from deepening the dependency of poorer countries on Western capital, Fabio Vighi says lockdowns and the global suspension of economic transactions allowed the US Fed to flood the ailing financial markets (under the guise of COVID) with freshly printed money while shutting down the real economy to avoid hyperinflation. Lockdowns suspended business transactions, which drained the demand for credit and stopped the contagion.

COVID provided cover for a multi-trillion-dollar bailout for the capitalist economy that was in meltdown prior to COVID. Despite a decade or more of ‘quantitative easing’, this new bailout came in the form of trillions of dollars pumped into financial markets by the US Fed (in the months prior to March 2020) and subsequent ‘COVID relief’.

The IMF, World Bank and global leaders knew full well what impact closing down the world economy through COVID-related lockdowns would have on the world’s poor.

Yet they sanctioned it and there is now the prospect that in excess of a quarter of a billion more people worldwide will fall into extreme levels of poverty in 2022 alone.

In April 2020, the Wall Street Journal stated the IMF and World Bank faced a deluge of aid requests from scores of poorer countries seeking bailouts and loans from financial institutions with $1.2 trillion to lend.

In addition to helping to reboot the financial system, closing down the global economy deliberately deepened poorer countries’ dependency on Western global conglomerates and financial interests.

Lockdowns also helped accelerate the restructuring of capitalism that involves smaller enterprises being driven to bankruptcy or bought up by monopolies and global chains. This ensures continued viable profits for Big Tech, the digital payments giants and global online corporations like Meta and Amazon, while eradicating millions of jobs.

Although the effects of the conflict in Ukraine cannot be dismissed, with the global economy now open again, inflation is rising and causing a ‘cost of living’ crisis. With a debt-ridden economy, there is limited scope for rising interest rates to control inflation.

But this crisis is not inevitable: current inflation is not only induced by the liquidity injected into the financial system but is also being fuelled by speculation in food commodity markets and by corporate greed, as energy and food corporations continue to rake in vast profits at the expense of ordinary people.

Resistance

However, resistance is fertile.

Aside from the many anti-restriction/pro-freedom rallies during COVID, we are now seeing a more strident trade unionism coming to the fore, in Britain at least, led by media-savvy leaders like Mick Lynch, general secretary of the National Union of Rail, Maritime and Transport Workers (RMT), who know how to appeal to the public and tap into widely held resentment against the soaring cost of living.

Teachers, health workers and others could follow the RMT into taking strike action.

Lynch says that millions of people in Britain face lower living standards and the stripping out of occupational pensions. He adds:

“COVID has been a smokescreen for the rich and powerful in this country to drive down wages as far as they can.”

Just as a decade of imposed ‘austerity’ was used to achieve similar results in the lead-up to COVID.

The trade union movement should now be taking a leading role in resisting the attack on living standards and further attempts to run down state-provided welfare and privatise what remains.

The strategy to wholly dismantle and privatise health and welfare services seems increasingly likely given the need to rein in (COVID-related) public debt and the trend towards AI, workplace automation and worklessness.

This is a real concern because, following the logic of capitalism, work is a condition for the existence of the labouring classes. So, if a mass labour force is no longer deemed necessary, there is no need for mass education, welfare and healthcare provision, or for the systems that have traditionally served to reproduce and maintain the labour that capitalist economic activity has required.

In 2019, Philip Alston, the UN rapporteur on extreme poverty, accused British government ministers of the “systematic immiseration of a significant part of the British population” in the decade following the 2008 financial crash.

Alston stated:

“As Thomas Hobbes observed long ago, such an approach condemns the least well off to lives that are ‘solitary, poor, nasty, brutish, and short’. As the British social contract slowly evaporates, Hobbes’ prediction risks becoming the new reality.”

Post-COVID, Alston’s words carry even more weight.

As this article draws to a close, news is breaking that Boris Johnson has resigned as prime minister. A remarkable PM if only for his criminality, lack of moral foundation and double standards – also applicable to many of his cronies in government.

With this in mind, let’s finish where we began.

“I have never seen a class so deeply demoralised, so incurably debased by selfishness, so corroded within, so incapable of progress, as the English bourgeoisie…

For it nothing exists in this world, except for the sake of money, itself not excluded. It knows no bliss save that of rapid gain, no pain save that of losing gold.

In the presence of this avarice and lust of gain, it is not possible for a single human sentiment or opinion to remain untainted.” Friedrich Engels, The Condition of the Working Class in England (1845), p.275